Techniques based on reinforcement learning have been used to solve a wide range of learning problems. However, most current reinforcement learning techniques work only in Markovian settings, where rewards, optimal actions and transitions between states depend only on the present state. To extend their use to non-Markovian environments, several reinforcement learning models have been augmented with working memory (Zilli and Hasselmo, 2008; Todd et al., 2009; Lloyd et al., 2012), which lets the learner keep track of past events so that they can form part of the current context when making decisions. Such models have been shown to learn challenging non-Markovian tasks such as the 12-AX task (Frank et al., 2001). However, one problem with these models is that they often assume that working memory capacity remains fixed throughout the task. This seems implausible from a human learning perspective, since it requires the learner to decide, before embarking on the task, how much working memory capacity to allocate for its entire duration.
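To make concrete why the 12-AX task is non-Markovian, the following Python sketch labels a stimulus stream with the correct responses under the usual 12-AX rules (Frank et al., 2001): after a 1, the target is an X immediately preceded by an A; after a 2, it is a Y immediately preceded by a B; every other stimulus is a non-target. The function names `generate_sequence` and `correct_response`, the `R`/`L` response labels, and the uniform sampling of stimuli with 1 to 4 letter pairs per digit are illustrative assumptions, not the task parameters used in this work.

```python
import random
from typing import List, Optional

DIGITS = ["1", "2"]
FIRST_LETTERS = ["A", "B", "C"]
SECOND_LETTERS = ["X", "Y", "Z"]


def generate_sequence(n_outer: int, max_inner: int = 4, seed: int = 0) -> List[str]:
    """Sample a 12-AX stimulus stream: each digit is followed by 1..max_inner letter pairs.

    Uniform sampling is an assumption made for illustration only.
    """
    rng = random.Random(seed)
    stimuli: List[str] = []
    for _ in range(n_outer):
        stimuli.append(rng.choice(DIGITS))          # outer-loop context (1 or 2)
        for _ in range(rng.randint(1, max_inner)):  # inner loops of letter pairs
            stimuli.append(rng.choice(FIRST_LETTERS))
            stimuli.append(rng.choice(SECOND_LETTERS))
    return stimuli


def correct_response(stimuli: List[str]) -> List[str]:
    """Return 'R' for targets and 'L' for every other stimulus under the 12-AX rules."""
    responses: List[str] = []
    digit: Optional[str] = None     # most recent digit, possibly many steps back
    previous: Optional[str] = None  # stimulus immediately before the current one
    for s in stimuli:
        if s in DIGITS:
            digit = s
            responses.append("L")
        elif (digit == "1" and previous == "A" and s == "X") or (
            digit == "2" and previous == "B" and s == "Y"
        ):
            responses.append("R")
        else:
            responses.append("L")
        previous = s
    return responses


if __name__ == "__main__":
    example = ["1", "A", "X", "B", "Y", "2", "A", "X", "B", "Y"]
    for stim, resp in zip(example, correct_response(example)):
        print(stim, resp)
```

In the example, the X in `1 A X` is labelled R while the X in `2 A X` is labelled L. Because the same stimulus calls for different responses depending on a digit that may have appeared many steps earlier, no policy conditioned only on the current stimulus can solve the task; the digit and the preceding letter are exactly what a working memory must hold.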

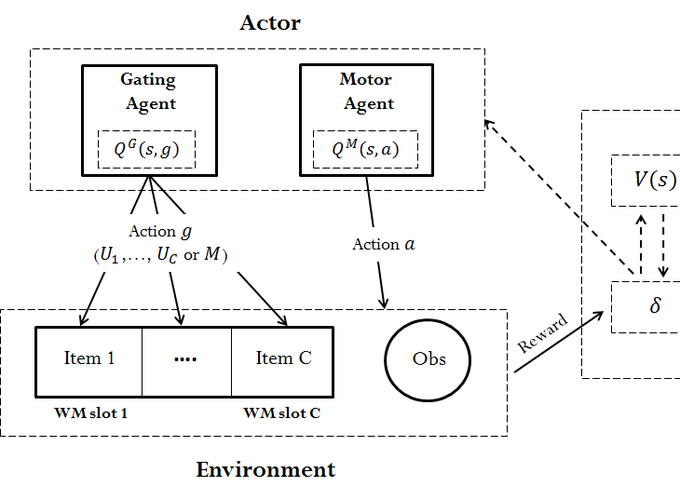

Here, we propose a model that gradually and automatically allocates working memory resources in response to its current accuracy rate, and show that, on the 12-AX task, it handles the trade-off between learning speed and learning accuracy well in comparison with a standard model that uses a fixed working memory capacity.
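The allocation rule is not specified here, so the sketch below is a purely hypothetical illustration of what accuracy-driven allocation could look like, not the proposed model. It tracks an exponentially weighted running accuracy and adds one working memory slot whenever accuracy has stopped improving for a fixed number of trials while still below a target. The class `AdaptiveCapacity` and all of its parameters (`alpha`, `patience`, `min_gain`, `target_accuracy`, `max_capacity`), as well as the toy learner in the `__main__` block, are invented for illustration.

```python
from dataclasses import dataclass
import random


@dataclass
class AdaptiveCapacity:
    """Hypothetical controller that grows working memory capacity when accuracy stalls."""

    capacity: int = 1              # current number of working memory slots (start small)
    max_capacity: int = 4          # hypothetical upper bound on slots
    target_accuracy: float = 0.95  # stop growing once running accuracy reaches this
    alpha: float = 0.02            # smoothing factor of the running accuracy estimate
    patience: int = 200            # trials without progress before adding a slot
    min_gain: float = 0.01         # rise in running accuracy that counts as progress
    running_acc: float = 0.0
    best_acc: float = 0.0
    trials_since_gain: int = 0

    def update(self, correct: bool) -> int:
        """Record one trial outcome and return the (possibly increased) capacity."""
        # Exponentially weighted moving average of trial-by-trial accuracy.
        self.running_acc += self.alpha * (float(correct) - self.running_acc)
        if self.running_acc > self.best_acc + self.min_gain:
            self.best_acc = self.running_acc
            self.trials_since_gain = 0
        else:
            self.trials_since_gain += 1
        stalled = self.trials_since_gain >= self.patience
        if (stalled and self.running_acc < self.target_accuracy
                and self.capacity < self.max_capacity):
            self.capacity += 1
            # Give the enlarged memory a fresh patience window and baseline.
            self.trials_since_gain = 0
            self.best_acc = self.running_acc
        return self.capacity


if __name__ == "__main__":
    rng = random.Random(0)
    ctrl = AdaptiveCapacity()
    for _ in range(5000):
        # Toy assumption: a learner whose attainable accuracy rises with capacity.
        p_correct = min(0.6 + 0.2 * ctrl.capacity, 0.99)
        ctrl.update(rng.random() < p_correct)
    print("final capacity:", ctrl.capacity,
          "running accuracy:", round(ctrl.running_acc, 3))
```

Starting with a small memory and growing it only when accuracy plateaus is one way such a rule could favour fast early learning while still allowing high accuracy when the task demands more memory, whereas a fixed-capacity model must commit to a single value in advance.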

References

Frank, M. J., Loughry, B., and O’Reilly, R. C. (2001). Interactions between the frontal cortex and basal ganglia in working memory: A computational model. Cognitive, Affective, & Behavioral Neuroscience, 1(2):137-160.

Lloyd, K., Becker, N., Jones, M. W., and Bogacz, R. (2012). Learning to use working memory: a reinforcement learning gating model of rule acquisition in rats. Frontiers in Computational Neuroscience, 6(87).

Todd, M. T., Niv, Y., and Cohen, J. D. (2009). Learning to use working memory in partially observable environments through dopaminergic reinforcement. In Advances in Neural Information Processing Systems, pages 1689-1696.

Zilli, E. A. and Hasselmo, M. E. (2008). Modeling the role of working memory and episodic memory in behavioral tasks. Hippocampus, 18(2):193-209.